Back in June, on a lark (or maybe on a nostalgia kick induced by an article I read somewhere), I wanted to see if SETI@Home still existed. This was a system designed by UC Berkeley around 2000 to turn spare CPU cycles on otherwise idle computers into a massive distributed computing infrastructure. It turns out that not only does it still exist, but in the nearly 20 years I hadn’t been paying attention it has grown into a giant ecosystem of so-called “Volunteer Computing” (VC) projects built on the Berkeley Open Infrastructure for Network Computing (BOINC).

The premise of these systems is simple, but the implementation is of course more complicated. Our computers sit there running most of the day with nothing to do. The idea behind BOINC and other VC projects is to take those spare cycles and put them to work on embarrassingly parallel scientific computing workloads. Individually, each user contributes only a little bit to the compute infrastructure, but across hundreds of thousands of active users it makes a big difference. At the time of this writing the entire BOINC ecosystem averaged nearly 27 PFLOPS. To put that into perspective, that would place it at #5 on the Top 500 list of the most powerful supercomputers in the world. The how of it is complicated and I don’t want to get into the minutiae here; this paper does a good job of covering it. I want to concentrate on the practical side of it for me.

While I started this as a quest to see if SETI@Home was still there, and maybe to contribute some spare cycles to it, it has grown far beyond that at this point. It wasn’t long after SETI@Home got off the ground that its team decided to create a more generic distributed computing framework. That framework has allowed dozens of projects to use these aggregated compute resources. A list of about three dozen active projects can be found on BOINC’s website. It’s pretty stupendous, really. There are plenty of projects I couldn’t care less about, but more than a dozen that I actually wanted to contribute resources to. BOINC does provide some guidance on selecting a project; as the page linked above puts it, “think about”:

- Does the project clearly describe its goals? Are these goals important and beneficial?

- Has the project published results in peer-reviewed journals or conferences? See A list of scientific publications of BOINC projects.

- Do you trust the project to use proper security practices?

- Who owns the results of the computation? Will they be freely available? Will they belong to a company?

I ended up whittling my list down to the following projects:

- Asteroids@Home: It’s a funny coincidence that, weeks after I started personally working on open source code at the B612 Foundation, which is working to help protect Earth from catastrophic asteroid impacts, I would trip across a VC project that helps define the shapes of asteroids. This project uses what is known as “light curve” data from observed asteroids to refine their geometry and composition. Getting these right is necessary for more accurately predicting an asteroid’s trajectory decades out.

- ClimatePrediction.net: No “@Home” moniker on this one, but studying climate models to help us understand climate change is of course a big thing. Unfortunately I didn’t check their active platforms first, and they currently only have models that run on Windows machines. I may have one of those soon, so I’ve kept it on my list.

- Cosmology@Home: The physics geek in me loves astrophysics, even though I have only followed it superficially for most of my life after taking classes on it in college. This project helps study cosmological and particle physics models.

- LHC@Home: You’ve probably heard of the Large Hadron Collider (LHC), the European supercollider that went searching for the “god particle.” Before it was turned on there was popular press coverage of it possibly creating a black hole by accident that would then destroy the Earth, Sun, solar system, etc. News flash: it didn’t do that, but it did find the particle it was looking for. Research continues and data sets still need to be crunched. Helping the particle physics people with this, alongside the Cosmology@Home project above, seemed like a good complement.

- MilkyWay@Home: This is another physics-centric project, which should help us understand fundamental particle physics, the nature of dark matter, and the like, while also helping us better understand the structure of our own galaxy.

- SETI@Home: This is the project that started it all, both BOINC itself and this whole quest of mine. Yes, we are actually looking for “aliens.” No, we haven’t found any yet. I’ll be cynical and add that we probably won’t find them in our lifetime either. To me it is still a worthy goal to look for them, hence some CPU cycles go to them.

- Rosetta: This is the biggest outlier among the projects I’ve decided to contribute to. It focuses on the study of proteins and genetics, the foundational, building-blocks-of-life level stuff. This sort of research is what will help drive further progress in the already miraculous field of gene therapies. Studies using Rosetta have already contributed to vaccine research, cancer research, and more. You can find out more in their news section.

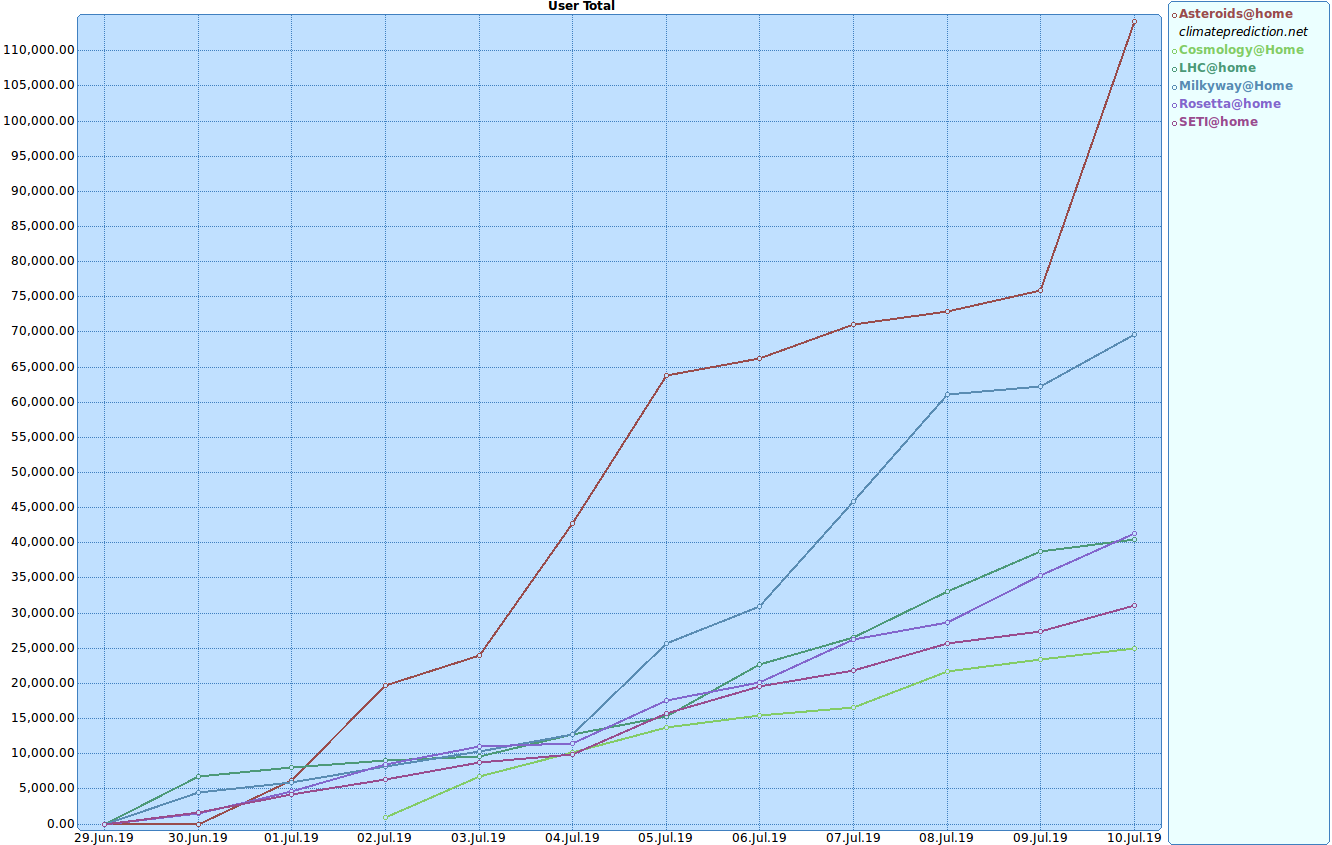

I’ve basically been running these projects since late June. I’ve installed the software on two Linux machines and two Macs. The “primary” machine I installed it on was my Linux desktop, where it happily crunches away on all 4 physical cores. I also installed it on my primary laptop, a MacBook Pro, although that doesn’t run as continuously. I also had two machines lying around doing nothing: my old 2017 System76 Linux laptop and my old 2011 MacBook Air. It runs full time on those as well, although in each case I have it use 50-80% of the CPUs to make sure I’m not running them into the ground prematurely (essentially, watching whether the fans are running too fast or the internal temperatures are getting too high). The net result of those four machines feeding those seven projects (really six, since ClimatePrediction.net won’t pick up work units without a Windows box) is below:

My BOINC Contributions with all Projects in one Graph

My BOINC contributions in aggregate

Ignore the stray point from June 10th (I don’t know where that came from); you can see a nearly linear increase over time. Some projects are lumpier than others, such as Asteroids@Home, because the volume of work units handed out or available changes. Cosmology@Home, for example, didn’t hand any work units to me until July 2nd for some reason. Some of these use GPUs and complete a work unit in 10-15 minutes. Others, like the Rosetta protein folding and some of the LHC computations, can take 8-12 hours for a single computation. BOINC pauses computations partway through to give time to other projects. BOINC also has settings to make sure it isn’t using the GPU while you are interacting with your computer, and to stop computing if you start needing more CPU resources. Yes, these computations all run at low priority, but having them churn in the background while you are playing a game or using CPU-intensive applications would still have a noticeable effect, which these settings try to avoid.
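The throttling and “back off when I’m busy” behavior described above is controlled by BOINC’s computing preferences, which can be set in the BOINC Manager or in a `global_prefs_override.xml` file in the BOINC data directory. As a minimal sketch, assuming the preference tags `max_ncpus_pct`, `cpu_usage_limit`, `suspend_cpu_usage`, and `run_gpu_if_user_active` (verify them against the BOINC documentation for your client version), a small Python helper could generate such a file. The function name `build_prefs_override` and the example values are hypothetical, not my actual settings.

```python
import xml.etree.ElementTree as ET


def build_prefs_override(max_ncpus_pct: float,
                         cpu_usage_limit: float,
                         suspend_cpu_usage: float,
                         run_gpu_if_user_active: bool = False) -> str:
    """Return XML text for a BOINC global_prefs_override.xml file (sketch).

    Hypothetical helper; tag names assumed from BOINC's global preferences.
    """
    for name, value in (("max_ncpus_pct", max_ncpus_pct),
                        ("cpu_usage_limit", cpu_usage_limit),
                        ("suspend_cpu_usage", suspend_cpu_usage)):
        if not 0 <= value <= 100:
            raise ValueError(f"{name} must be a percentage between 0 and 100")

    root = ET.Element("global_preferences")
    # Share of the machine's CPU cores BOINC may use (75 -> 3 of 4 cores).
    ET.SubElement(root, "max_ncpus_pct").text = f"{max_ncpus_pct:g}"
    # Share of time each of those cores spends computing (duty-cycle throttle).
    ET.SubElement(root, "cpu_usage_limit").text = f"{cpu_usage_limit:g}"
    # Suspend work when non-BOINC CPU usage rises above this percentage.
    ET.SubElement(root, "suspend_cpu_usage").text = f"{suspend_cpu_usage:g}"
    # "0" keeps BOINC off the GPU while someone is using the computer.
    ET.SubElement(root, "run_gpu_if_user_active").text = (
        "1" if run_gpu_if_user_active else "0")
    return ET.tostring(root, encoding="unicode")


if __name__ == "__main__":
    # Hypothetical values in the spirit of the 50-80% range mentioned above.
    print(build_prefs_override(75, 80, 25))
```

After saving the output as `global_prefs_override.xml`, running `boinccmd --read_global_prefs_override` should ask a running client to reload it; the same knobs are also exposed in the BOINC Manager’s computing preferences dialog, which is the simpler route for a single machine.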

While these curves show nearly straight-line growth in total contributions (second graph), they’ll start having kinks once real-world conditions kick in. I’ve been home essentially the whole time since I started, so these have been running at the same level throughout. I’m not going to leave them running when I’m traveling. I’ve also been running these machines a little harder during this shakeout phase than I plan to in the long term, and I’m starting to throttle my main machines more so it doesn’t impact daily life as much. I’m throttling my secondary machines not so much to extend their lifespan, which was effectively over for me at this point, but to make sure I don’t create a meltdown condition (not that there were any signs of that in the temperature or fan data). For later this year I am toying with the idea of getting a Threadripper machine with a decent graphics card that can double as a new VR system. That could contribute a lot more to the overall system.

In the end this is something that anyone can do to help marginally improve our understanding of basic science, physics, biology, or even just look for intelligent life in the universe or the next prime number. It’s well worth looking into.

2019-07-11

8 min read